Put an End to False Alarms

Accurate facial recognition can protect against the dangers of false alarms. Discover practical tips for reducing commercial false alarms and avoiding the “cry wolf” syndrome.

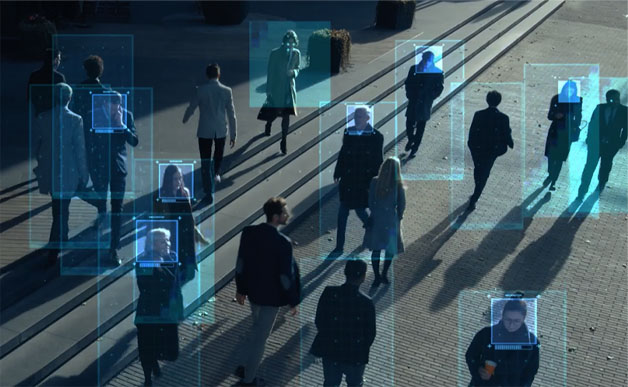

The Impact of Commercial False Alarms

Commercial alarms account for nearly half of all false alarms that public safety agencies respond to. False alarms cost businesses and the public millions of dollars in wasted time and resources.

When it comes to your video surveillance and watchlist alerting system, there’s a real danger in false alarms.

Cry Wolf Syndrome

Security staff start ignoring alerts, which opens the door to genuine threats.

Tarnished Brand

A good customer who is falsely accused by security has a negative experience, and your brand suffers for it.

Job Dissatisfaction

Your security team’s morale takes a hit when they’re forced to continually respond to false alarms and have painful encounters with customers.


What Causes False Alarms?

Within video surveillance systems, there are two types of recognition errors that enterprises should be concerned about: false positives, which trigger false alarms, and false negatives, which let a person on the watchlist pass undetected.

Both ultimately come down to the accuracy of the underlying facial recognition engine: the more accurate the engine, the fewer the false alarms and missed detections.

False Positives

The system incorrectly identifies a person as a subject from the database.

False Negatives

The system fails to identify a person who is a subject in the database.
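To make the two error types concrete, here is a minimal Python sketch. It assumes a system that raises a watchlist alert whenever a match score meets a fixed threshold; the `threshold` value, the scores, and the `Outcome` labels are hypothetical illustrations, not Oosto's implementation.

```python
from enum import Enum


class Outcome(Enum):
    TRUE_POSITIVE = "true positive"    # on the watchlist, alert raised
    FALSE_POSITIVE = "false positive"  # not on the watchlist, alert raised anyway
    FALSE_NEGATIVE = "false negative"  # on the watchlist, no alert
    TRUE_NEGATIVE = "true negative"    # not on the watchlist, no alert


def classify(score: float, on_watchlist: bool, threshold: float) -> Outcome:
    """Label one detection given its match score and the ground truth."""
    alert = score >= threshold
    if alert and on_watchlist:
        return Outcome.TRUE_POSITIVE
    if alert:
        return Outcome.FALSE_POSITIVE
    if on_watchlist:
        return Outcome.FALSE_NEGATIVE
    return Outcome.TRUE_NEGATIVE


# Hypothetical detections: (match score, is this person really on the watchlist?)
detections = [(0.91, True), (0.74, False), (0.42, True), (0.30, False)]
for score, truth in detections:
    print(score, classify(score, truth, threshold=0.70).value)
```

Raising the threshold trades false positives for false negatives, and lowering it does the opposite. A more accurate engine separates the scores of genuine and non-matching faces more cleanly, so a single threshold can keep both error types low.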

7 Tips to Improve Facial Recognition Accuracy

1. Leverage Deep Learning

Historically, facial recognition systems used computer algorithms to pick out specific, distinctive details of a person’s face. These details, such as the distance between the eyes or the shape of the chin, were converted into a mathematical representation and compared against data on other faces stored in a face recognition database.

How this process is performed varies widely by solution provider, so it’s important to understand how a vendor performs facial recognition in order to assess whether it follows ethical best practices. More recently, leading solution providers have turned to neural networks trained with deep learning to recognize specific faces. These networks map each face image to a mathematical vector (an embedding), and images of the same person produce vectors that lie close together.
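Comparing two embeddings usually comes down to a similarity measure between vectors. The sketch below uses cosine similarity, a common choice, on toy 4-dimensional vectors; the vectors are made up, real face embeddings come from a trained network and have far more dimensions, and which measure a given vendor uses is an assumption here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embeddings (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings for illustration only.
probe = [0.1, 0.8, 0.3, 0.5]
same_person = [0.12, 0.79, 0.28, 0.52]
other_person = [0.9, 0.1, 0.4, 0.05]

print(round(cosine_similarity(probe, same_person), 3))
print(round(cosine_similarity(probe, other_person), 3))
```

The probe scores much higher against the embedding of the same person than against someone else, which is the property a recognition threshold relies on.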

2. Train in Real-World Conditions

In the real world, say within a casino or stadium, subjects are far less cooperative and often do not look directly at the camera. In these scenarios, we regularly encounter extreme poses and camera angles, poor image quality, low lighting, or occlusions such as hats, masks, or other coverings.

Neural networks trained under these conditions perform much better in production than models that were built under ideal circumstances – that is, where the person is looking directly at the camera, the camera is at face level, the image quality is crystal clear, and the subject is well lit.
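Footage captured in the target environment is the most direct source of such training data. Where it is scarce, a common complement is data augmentation: synthetically degrading clean images so the model also sees dim, partly covered faces. The dependency-free sketch below illustrates the idea on a toy grayscale image stored as a list of pixel rows; the dimming range and occlusion size are hypothetical, real pipelines use an image library and richer transforms, and this is not a description of how Oosto trains its models.

```python
import random

Image = list[list[int]]  # grayscale pixels, 0 (black) to 255 (white)


def darken(image: Image, factor: float) -> Image:
    """Simulate low lighting by scaling every pixel toward black."""
    return [[int(p * factor) for p in row] for row in image]


def occlude(image: Image, top: int, left: int, height: int, width: int) -> Image:
    """Simulate a hat, mask, or other covering by blacking out a rectangle."""
    out = [row[:] for row in image]  # copy so the input is not modified
    for r in range(top, min(top + height, len(out))):
        for c in range(left, min(left + width, len(out[r]))):
            out[r][c] = 0
    return out


def augment(image: Image, rng: random.Random) -> Image:
    """Apply a random low-light level plus a full-width occlusion band."""
    factor = rng.uniform(0.2, 0.6)  # hypothetical dimming range
    rows = len(image)
    band = max(1, rows // 3)        # cover roughly a third of the face
    top = rng.randrange(0, rows - band + 1)
    return occlude(darken(image, factor), top, 0, band, len(image[0]))


face = [[200] * 6 for _ in range(6)]  # toy 6x6 "face"
print(augment(face, random.Random(0)))
```

Seeding the `random.Random` instance makes each augmented example reproducible, which helps when auditing what a model was trained on.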

3. Large Data Sets

In the world of facial recognition, neural nets need lots and lots of data to build highly predictive models. This includes images of people from the vast majority of ethnicities, skin colors, races, and genders around the world. With a training set of 50 million images, we have significant capability to address bias, because our training data includes large, representative populations of different demographics.
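A quick first check on representativeness is each group's share of the training set. The minimal sketch below assumes every image carries a demographic group label; the group names and the `min_share` cutoff are hypothetical, and share alone does not guarantee unbiased results.

```python
from collections import Counter


def representation_report(labels: list[str], min_share: float) -> dict[str, tuple[float, bool]]:
    """Return each group's share of the dataset and whether it meets min_share."""
    if not labels:
        raise ValueError("no labels to summarize")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: (count / total, count / total >= min_share)
        for group, count in sorted(counts.items())
    }


# Hypothetical group labels for a tiny sample of training images.
sample = ["group_a"] * 50 + ["group_b"] * 35 + ["group_c"] * 15
for group, (share, ok) in representation_report(sample, min_share=0.2).items():
    print(f"{group}: {share:.0%} {'ok' if ok else 'UNDER-REPRESENTED'}")
```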

4. Data Labeling

In most AI projects, classifying and labeling facial data sets takes a fair amount of time and subject matter expertise. Accurate labels are a prerequisite for delivering highly accurate facial recognition in real time.
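Labeling is largely careful manual work, but a consistent schema keeps it auditable. Below is a hypothetical Python sketch of a per-image label record that rejects values outside an agreed vocabulary; the field names and allowed values are illustrative assumptions. Metadata such as pose, lighting, and occlusion is also the kind of information later bias audits can slice on.

```python
from dataclasses import dataclass

# Hypothetical label vocabularies; a real project would define its own.
POSES = {"frontal", "profile", "extreme"}
LIGHTING = {"good", "low"}
OCCLUSIONS = {"none", "hat", "mask", "glasses"}


@dataclass(frozen=True)
class FaceLabel:
    image_path: str
    identity_id: str  # which person this face belongs to
    pose: str
    lighting: str
    occlusion: str

    def __post_init__(self) -> None:
        # Reject labels outside the agreed vocabulary so typos can't slip in.
        for field_name, value, allowed in (
            ("pose", self.pose, POSES),
            ("lighting", self.lighting, LIGHTING),
            ("occlusion", self.occlusion, OCCLUSIONS),
        ):
            if value not in allowed:
                raise ValueError(f"invalid {field_name}: {value!r}")
        if not self.identity_id:
            raise ValueError("identity_id must not be empty")


label = FaceLabel("img_0001.jpg", "person_42", "profile", "low", "mask")
print(label)
```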

5. Watchlist Images

It’s imperative that the face images stored in the database (your watchlist) are high quality and clear, because every detection that passes through the system is compared against them. We suggest storing a few reference images per individual (e.g., one with a full facial view, another with a partial view of the face, and perhaps a third with the person wearing a hat, mask, or glasses).
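A minimal sketch of why several reference images help, assuming each watchlist entry stores one embedding per reference image and a person's score is the best similarity across those references. The toy embeddings, the `threshold`, and the max-over-references rule are illustrative assumptions; vendors may combine references differently.

```python
from __future__ import annotations

import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def best_watchlist_match(
    probe: list[float],
    watchlist: dict[str, list[list[float]]],
    threshold: float,
) -> tuple[str, float] | None:
    """Return (person, score) for the strongest match at or above threshold.

    A person's score is the best similarity over all of their references, so a
    probe of someone wearing a mask can still match their masked reference.
    """
    best: tuple[str, float] | None = None
    for person, references in watchlist.items():
        if not references:
            continue  # skip entries with no reference images
        score = max(cosine_similarity(probe, ref) for ref in references)
        if score >= threshold and (best is None or score > best[1]):
            best = (person, score)
    return best


# Hypothetical watchlist: person_a has two reference views, person_b has one.
watchlist = {
    "person_a": [[1.0, 0.0, 0.0], [0.7, 0.7, 0.0]],
    "person_b": [[0.0, 0.0, 1.0]],
}
print(best_watchlist_match([0.72, 0.69, 0.0], watchlist, threshold=0.8))
```

Here the probe is only a weak match for person_a's first reference but a strong match for the second, so an extra reference view turns what would have been a false negative into a correct alert.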

6. Experienced AI/ML Team

Reducing demographic bias and increasing recognition accuracy also depend on the people who develop the AI algorithms and tag the data sets. The more challenging the environmental conditions, the greater the need for “explainable AI,” which requires an AI team that is well versed in this nuanced area of artificial intelligence.

7. Course Correction

After the metadata has been applied and audited, neural networks can cluster images to help find areas where bias may have crept into the model. We don't look at just one element, such as skin color; we look at a collection or subset of metadata.
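The section describes clustering; the sketch below is a simpler, complementary audit and not Oosto's method. It computes the false negative rate for every combination of chosen metadata fields, so a gap that only appears when two attributes combine (say, a particular skin tone under low lighting) becomes visible. The field names, values, and records are hypothetical.

```python
from collections import defaultdict


def false_negative_rate_by_subgroup(
    results: list[dict],
    keys: tuple[str, ...],
) -> dict[tuple, float]:
    """False negative rate for each combination of metadata values."""
    misses: dict[tuple, int] = defaultdict(int)
    totals: dict[tuple, int] = defaultdict(int)
    for r in results:
        if not r["on_watchlist"]:
            continue  # false negatives only concern people who should have matched
        subgroup = tuple(r[k] for k in keys)
        totals[subgroup] += 1
        if not r["alert"]:
            misses[subgroup] += 1
    return {g: misses[g] / totals[g] for g in sorted(totals)}


# Hypothetical evaluation records with audited metadata.
results = [
    {"on_watchlist": True, "alert": True, "skin_tone": "tone_a", "lighting": "good"},
    {"on_watchlist": True, "alert": True, "skin_tone": "tone_b", "lighting": "good"},
    {"on_watchlist": True, "alert": False, "skin_tone": "tone_b", "lighting": "low"},
    {"on_watchlist": True, "alert": True, "skin_tone": "tone_b", "lighting": "low"},
    {"on_watchlist": True, "alert": True, "skin_tone": "tone_a", "lighting": "low"},
    {"on_watchlist": False, "alert": False, "skin_tone": "tone_a", "lighting": "low"},
]
for subgroup, rate in false_negative_rate_by_subgroup(results, ("skin_tone", "lighting")).items():
    print(subgroup, f"{rate:.0%}")
```

Passing `keys=("skin_tone",)` looks at a single element on its own; passing several keys looks at their intersection, which is where this toy data's gap shows up.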

How to Find and Test the Most Accurate Video Surveillance Systems

Unfortunately, there is not a Consumer Reports (or even a Gartner report) that provides a short list of credible, highly accurate facial recognition solution providers.

Many commercial customers turn to NIST’s FRVT report, which is published annually, to help identify the most accurate players in the space. This makes sense if you are evaluating solutions where a static photo of a person is presented and you need to compare that image against a database (watchlist) of photographs.

But for most surveillance use cases, where video analytics is used for real-time facial recognition, FRVT is wholly inadequate and inappropriate as a benchmark. Learn more about NIST’s FRVT testing and its limitations in this blog post: 5 Limitations of NIST's FRVT Testing for Video Surveillance.

The Oosto Solution: Real-time insights at any scale.

Putting Vision AI to Work

In today's world, organizations face evolving threats to safety and security, and an increasing responsibility to protect employees, customers, and communities. Learn how Oosto protects the people that propel your business.
